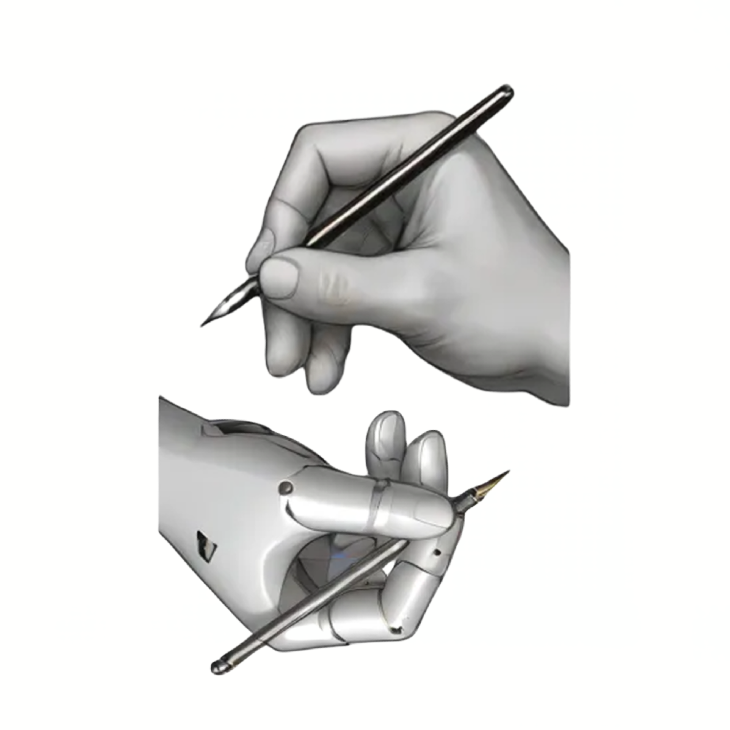

The evolution from image to video diffusion models marks a pivotal leap in machine learning. The transition is not just added complexity but a fundamental shift: a video model has to keep frames consistent over time, process far more data per sample, represent space and time jointly, and learn from scarcer training data. This article looks at each of these challenges in turn.

Mastering the Temporal Labyrinth

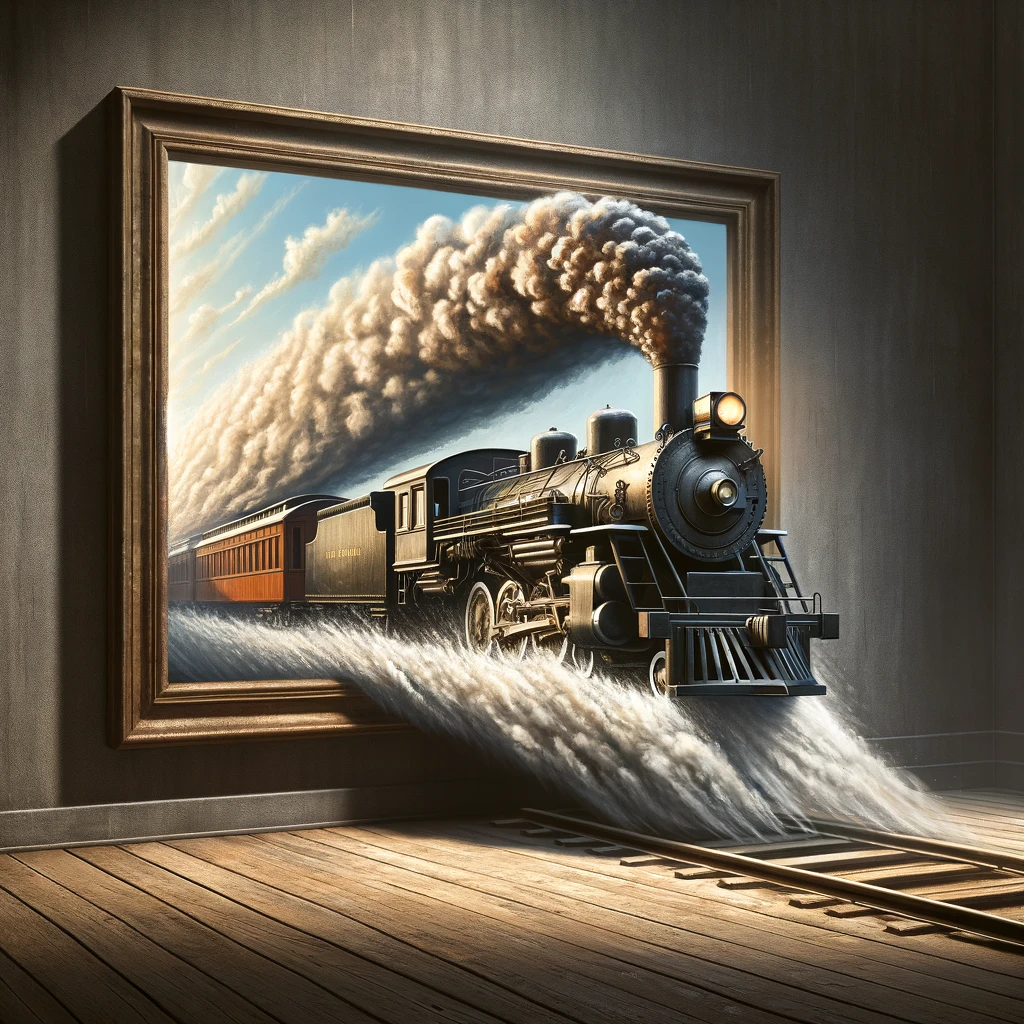

The journey into video diffusion models leads into the labyrinth of temporal coherence, where a video is not just a collection of images but a narrative woven in motion. Each frame must be generated with high fidelity and must also agree with its neighbors, so that objects, lighting, and motion carry over from one frame to the next without flicker or sudden jumps. The challenge is as much about storytelling as about technology: transitions have to be not just smooth but meaningful, preserving the continuity of motion and story.
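To make the idea of smooth transitions concrete, here is a minimal sketch that compares consecutive frames. It is an illustrative assumption rather than a metric taken from any particular model: frames are small grayscale grids stored as nested lists, and the function names are hypothetical. It reports the mean absolute pixel difference between neighboring frames, so a sudden spike hints at flicker or a jump.

```python
def frame_difference(frame_a, frame_b):
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += abs(pixel_a - pixel_b)
            count += 1
    return total / count


def temporal_differences(frames):
    """Difference between each pair of consecutive frames in a clip."""
    return [frame_difference(a, b) for a, b in zip(frames, frames[1:])]


# Toy 2x2 clip: brightness rises gently, then jumps in the last frame.
clip = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.1, 0.1], [0.1, 0.1]],
    [[0.2, 0.2], [0.2, 0.2]],
    [[0.9, 0.9], [0.9, 0.9]],
]
differences = temporal_differences(clip)
print(differences)  # the last value is much larger than the first two
```

A measure like this captures only smoothness. Whether a transition is meaningful, in the sense of carrying the motion and story forward, is a harder property that a pixel difference cannot judge.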

The Quest for Efficiency and Fidelity

The shift from static images to video brings a daunting escalation in data volume. Because a video is a sequence of images, the amount of data per sample grows with the number of frames, mounting a computational Everest. The challenge is double-edged: the model must process this stream of data efficiently, yet it must not compromise the depth and quality of the visual narrative. At its core, this is a balance between computational efficiency and the fidelity of the rendered output, so that the essence of the video is not lost in the quest for efficiency.
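A rough back-of-the-envelope calculation shows the scale of the jump. The resolution, clip length, and frame rate below are assumed purely for illustration, and the helper name is hypothetical; the sketch counts raw, uncompressed values only.

```python
def raw_values(height, width, channels=3, frames=1):
    """Number of raw values in an uncompressed image (frames=1) or video clip."""
    return height * width * channels * frames


# Assumed illustrative settings: 512x512 RGB, 4 seconds at 24 frames per second.
image_values = raw_values(512, 512)
video_values = raw_values(512, 512, frames=4 * 24)

ratio = video_values // image_values
print(f"image: {image_values:,} values")                        # image: 786,432 values
print(f"video: {video_values:,} values ({ratio}x the image)")   # video: 75,497,472 values (96x the image)
```

Under these assumptions, even a four-second clip carries 96 times the raw data of a single image, which is why efficiency cannot be treated as an afterthought.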

Advanced Data Representation and Processing

Representing and processing video data effectively is akin to weaving a complex fabric: the warp threads are the spatial elements within each frame, and the weft is the temporal dynamics that bind these frames into a coherent flow. This calls for a model architecture capable of capturing both spatial and temporal structure at once. The sophistication lies not just in understanding individual elements but in interpreting the larger tapestry of motion and change they compose, so that the model can perceive and generate the fluidity of real-world dynamics.
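One commonly used option for handling space and time together, which this article does not itself prescribe, is to split attention into a spatial pass within each frame and a temporal pass across frames. The sketch below does not implement a model; it only counts how many token pairs each scheme has to compare. The clip size, token grid, and function names are assumptions chosen for illustration.

```python
def joint_attention_pairs(frames, tokens_per_frame):
    """Token pairs when every token attends to every token in the clip."""
    total_tokens = frames * tokens_per_frame
    return total_tokens ** 2


def factorized_attention_pairs(frames, tokens_per_frame):
    """Token pairs when attention is split into a spatial and a temporal pass."""
    spatial = frames * tokens_per_frame ** 2   # within each frame
    temporal = tokens_per_frame * frames ** 2  # same position across frames
    return spatial + temporal


# Assumed toy sizes: 16 frames, each a 32x32 grid of tokens.
frames, tokens_per_frame = 16, 32 * 32
joint = joint_attention_pairs(frames, tokens_per_frame)
factorized = factorized_attention_pairs(frames, tokens_per_frame)

print(f"joint:      {joint:,} pairs")       # joint:      268,435,456 pairs
print(f"factorized: {factorized:,} pairs")  # factorized: 17,039,360 pairs
```

The factorized scheme is far cheaper in this toy setting, but it gives up direct interaction between tokens at different positions in different frames. That is the same trade-off between efficiency and fidelity, showing up at the level of architecture.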

The Diverse Data Expedition

Training data for video diffusion models presents its own adventure. Unlike the abundant repositories of images available for training static models, high-quality, diverse video data is far scarcer. This scarcity can constrict a model’s capacity to generate a realistic and varied range of outputs. The quest is not just for quantity but for diversity and quality: finding or creating datasets that offer a rich palette of real-world dynamics, so that trained models are as versatile as the reality they seek to emulate.

Looking Forward

As we navigate these challenges, the path forward is full of technical intricacies and calls for innovation. Yet these hurdles are also opportunities for growth in the field of video generation.