The world of artificial intelligence has seen a boom recently, and at the forefront of this revolution are Large Language Models (LLMs). These models, ranging from open-weight architectures such as Llama to proprietary, pay-per-token services such as OpenAI’s GPT models, have captivated the imagination of the public and industry alike with their ability to generate detailed, nuanced, and context-aware responses.

They can help draft lengthy articles, write poetry, solve complex coding problems, and even provide customer support that almost feels human. But as impressive as these models are, they come with some substantial trade-offs. That’s where Small Language Models (SLMs) enter the picture, quietly but effectively meeting needs that LLMs can’t. Today, let’s dive into why SLMs are becoming the go-to solution for many practical AI applications, and why they might just be the real game-changer.

The Allure and Limitations of LLMs

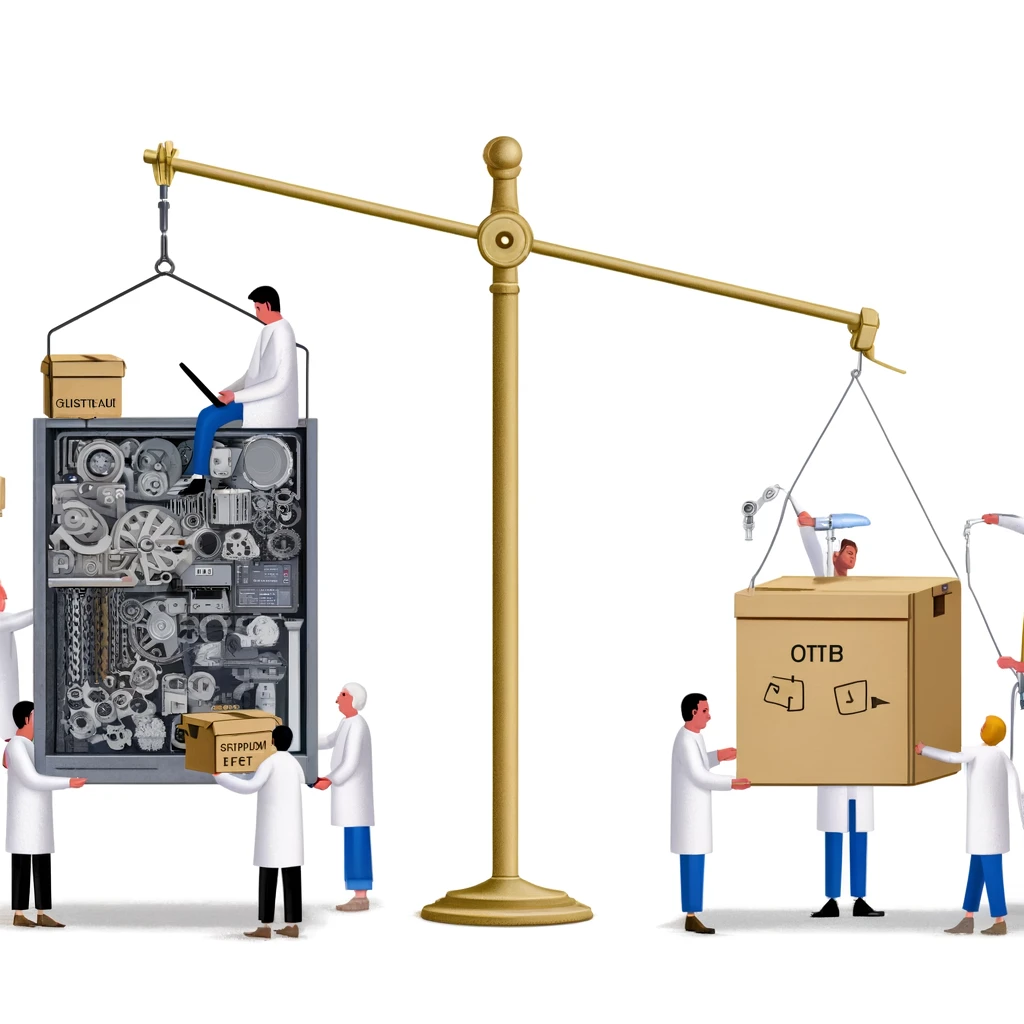

There’s no denying that LLMs have redefined our understanding of artificial intelligence. Their massive datasets and billions of parameters make them capable of incredible feats—everything from generating original stories to translating rare languages. Yet, these benefits come at significant costs.

1. Resource Hungry

LLMs are powerhouses, and with that power comes an insatiable hunger for computational resources. Training a frontier LLM like GPT-4 requires enormous datasets, GPU clusters that reportedly number in the tens of thousands of devices, and huge amounts of electricity. Serving these models at scale relies on data centers that draw megawatts of power. The expense of training and deploying these systems means that building them from scratch is realistic only for well-funded organizations or research institutes.
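To make the scale concrete, here is a rough back-of-the-envelope sketch of how much memory a model’s weights alone occupy: parameter count multiplied by bytes per parameter. The model sizes and precisions below are illustrative assumptions, not measurements of any specific product, and the estimate ignores activations, caches, and runtime overhead.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory needed to hold the weights, in GB (1 GB = 1e9 bytes).

    Counts the weights only; real deployments need extra memory on top.
    """
    return num_params * bytes_per_param / 1e9


# Hypothetical model sizes, chosen only for illustration.
MODEL_SIZES = {
    "1B-parameter model": 1e9,
    "7B-parameter model": 7e9,
    "70B-parameter model": 70e9,
}

# Common storage precisions and their size per parameter in bytes.
PRECISIONS = {"fp32": 4, "fp16": 2, "4-bit": 0.5}

for model_name, num_params in MODEL_SIZES.items():
    for precision_name, bytes_per_param in PRECISIONS.items():
        gb = weight_memory_gb(num_params, bytes_per_param)
        print(f"{model_name} at {precision_name}: ~{gb:.1f} GB")
```

Under these assumptions, a 70B-parameter model stored at 16-bit precision needs on the order of 140 GB for its weights, which is more than a single consumer GPU holds, while a 1B-parameter model at 4-bit precision needs about half a gigabyte.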

2. Latency and Speed Concerns

Given their enormous size, LLMs also tend to be slow to respond. Every generated token requires a pass over billions of parameters, so producing a full answer can be time-consuming, which is a significant drawback for real-time applications. When users expect near-instant responses, as in customer support or voice assistants, this lag can be a serious bottleneck.

3. Privacy Concerns

Because of their size, LLMs are usually hosted in the cloud, which means user data has to leave the device to be processed. Sending sensitive information to external servers adds risk, including exposure in a data breach, particularly in sectors like healthcare and finance where privacy is paramount. Complying with stringent data protection regulations is harder when data must be offloaded to the cloud for processing.

Enter Small Language Models (SLMs)

SLMs are designed to address the practical limitations of LLMs. They’re smaller, leaner, and more focused, built with efficiency and specificity in mind. Let’s explore why SLMs are gaining traction as a hot solution in the AI world.

1. Efficiency and Cost-Effectiveness

SLMs can be trained on smaller datasets, with significantly reduced computational requirements. They achieve this by focusing on specific domains or tasks rather than trying to be a one-size-fits-all model. The result? Lower training costs, less energy consumption, and models that can be deployed on a wider range of devices. This makes SLMs accessible to a much broader set of users—not just large corporations, but also startups, individual developers, and niche industries.

2. Speed and Low Latency

SLMs are incredibly nimble. With fewer parameters to work with, they can deliver responses in a fraction of the time required by an LLM. For applications requiring real-time interaction—think gaming, conversational interfaces, or voice-controlled devices—speed is key, and SLMs deliver. Their responsiveness allows them to integrate seamlessly with devices that require instantaneous user feedback.
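A simplified way to see why parameter count matters for speed: when a model generates text one token at a time for a single user, each token typically requires reading all of the weights from memory, so memory bandwidth divided by model size gives a rough upper bound on tokens per second. The sketch below applies that rule of thumb. The bandwidth figure and model sizes are hypothetical, and real throughput also depends on batching, caching, and hardware details that this ignores.

```python
def max_tokens_per_second(num_params, bytes_per_param, bandwidth_gb_s):
    """Rough upper bound on single-stream decoding speed.

    Assumes generation is limited by memory bandwidth and that every
    token requires reading all weights once. This is a simplification.
    """
    model_bytes = num_params * bytes_per_param
    return bandwidth_gb_s * 1e9 / model_bytes


# Hypothetical device memory bandwidth in GB/s, for illustration only.
BANDWIDTH_GB_S = 100.0

# Hypothetical model sizes, both stored at 16-bit precision (2 bytes each).
for name, num_params in [("3B-parameter model", 3e9), ("70B-parameter model", 70e9)]:
    rate = max_tokens_per_second(num_params, 2, BANDWIDTH_GB_S)
    print(f"{name}: at most ~{rate:.1f} tokens/s (~{1000 / rate:.0f} ms per token)")
```

On the same hypothetical hardware, the bound scales inversely with model size, so a model with roughly 23 times fewer parameters can in principle generate tokens roughly 23 times faster.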

3. Privacy and Security

Perhaps one of the biggest advantages of SLMs is their ability to be deployed locally, or “on the edge.” This means they can run on personal devices without the need for a continuous internet connection or sending data to the cloud. This enhances privacy, as sensitive data can stay on-device and doesn’t need to be transmitted anywhere. For applications involving medical records, financial data, or even personal messages, SLMs are a safer bet that can more easily align with strict privacy regulations.

4. Flexibility and Accessibility

SLMs can be customized for specific use cases far more easily than LLMs. Since they are smaller, training or fine-tuning them on targeted datasets is both cost-effective and feasible. This accessibility means that even small businesses or individual developers can innovate and apply AI to solve specific challenges, democratizing access to powerful AI technology.

Real-World Applications of SLMs

SLMs aren’t just a theoretical alternative; they’re already making waves across industries. Here are a few notable applications:

Healthcare

SLMs are empowering healthcare providers to develop smart solutions that can process medical information locally. For example, an SLM running on a healthcare professional’s tablet could help assess a condition by analyzing patient symptoms without ever uploading data to a central server, which preserves patient confidentiality and makes it easier to comply with regulations like HIPAA.

Finance

In the financial sector, privacy is paramount. SLMs are being used to analyze transactions in real time to detect fraud, all while ensuring customer data remains secure. They can also power customer support chatbots that respond quickly without sending sensitive financial data off to the cloud.

Manufacturing

Factories are using SLMs to monitor equipment performance and detect anomalies that might signal upcoming mechanical failures. Running these models on-site allows manufacturers to avoid sending operational data off-premises, reducing risks associated with data exposure.

Consumer Electronics

SLMs are powering smart thermostats, personal assistants, and wearable devices. These devices often need to respond instantly and must conserve energy. Running an SLM locally allows for on-device processing, which means faster responses without draining battery life or requiring constant connectivity.

The Future is Small (and Smart)

The trend towards smaller, more efficient models represents a fundamental shift in the AI landscape. It’s a shift away from one-size-fits-all solutions towards more agile, domain-specific models that offer strong performance on their target tasks, enhanced privacy, and broader accessibility. LLMs undoubtedly have their place in pushing the limits of what AI can achieve, but SLMs bring the technology into more people’s hands and make it more applicable to everyday needs.

SLMs aren’t just a miniaturized version of LLMs; they are a different category of innovation—one that brings AI capabilities closer to the edge, closer to where people interact with technology, and closer to solving specific, practical problems.

Conclusion

Large Language Models have been incredible in showcasing the possibilities of artificial intelligence. Their versatility and power have taken us into a new age of conversational AI and machine understanding. But in the real world, efficiency, speed, and privacy are often more important than sheer scale. That’s where Small Language Models come in. They offer a practical, accessible, and powerful alternative that fits neatly into scenarios where LLMs simply can’t.

As AI continues to grow and become integrated into more aspects of our lives, it’s clear that bigger isn’t always better. Sometimes, the future lies in something smaller, faster, and a little more focused—and that’s what makes Small Language Models so hot right now.